Evolutionist: Okay, you have persuaded me that in order for life to kick off, intelligence is required. I accept ID argumentation regarding the necessary minimum complexity of a protobiotic cell.

However, I think that unguided evolution, by natural selection acting on random variation, adequately explains the observed biological diversity, given a self-replicating biosystem to start with. There is no epistemic need to invoke the intervention of intelligent actors after the start of life to account for the biodiversity we see. Doing so would contradict Occam's rule.

ID proponent:

The design-inference argument that applies to the start of life applies equally to biological evolution, which is supposedly responsible for the diversity of biological forms descending from a common ancestor. The qualitative and quantitative differences between the genomes of, say, prokaryotes and eukaryotes (e.g. bacteria versus plants and humans), or between humans and microorganisms, are so large that common ancestry through mutation, drift and natural selection is precluded as statistically implausible within the bounds of terrestrial physico-chemical processes, given the rates of these processes and a realistic maximum number of them over the whole of natural history [Abel 2009].
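The argument above turns on comparing the available "probabilistic resources" with the size of the search space. A minimal sketch of that comparison follows. It is our simplification, not Abel's published Universal Plausibility Metric: it asks only whether the expected number of functional hits (the number of trials multiplied by the functional fraction of the space) reaches one. The function names are hypothetical, the trial count and functional fraction are placeholder values rather than estimates, and everything is kept in log10 form because the raw numbers would overflow floating point.

```python
import math


def sequence_space_log10(length: int, alphabet_size: int) -> float:
    """log10 of the number of distinct sequences of the given length."""
    return length * math.log10(alphabet_size)


def expected_hits_log10(trials_log10: float, functional_fraction_log10: float) -> float:
    """log10 of (trials * probability that a single trial is functional).

    Assumes independent trials sampled uniformly from the sequence space,
    with no selection acting between trials.
    """
    return trials_log10 + functional_fraction_log10


def chance_plausible(trials_log10: float, functional_fraction_log10: float) -> bool:
    """Call a pure-chance hypothesis plausible only if at least one hit is expected."""
    return expected_hits_log10(trials_log10, functional_fraction_log10) >= 0.0


# A 150-residue protein over the 20 standard amino acids: about 10**195.15 sequences.
print(f"{sequence_space_log10(150, 20):.2f}")
# Hypothetical, purely illustrative inputs: 10**40 trials, 10**-60 of the space functional.
print(chance_plausible(40.0, -60.0))
```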

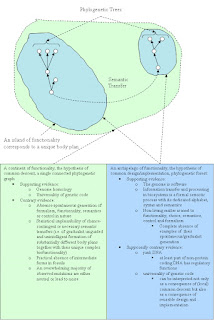

True, genomic adaptations do occur and can be observed. However, this does not solve the problem of functional isolation: complex functionality, even in highly adaptable biosystems, is necessarily finely tuned and so occupies islands of function in configuration space. The theory of global common descent posits that this problem does not exist and that all existing or extinct bioforms are nodes of a single phylogenetic graph. Careful consideration of functional isolation, however, gives grounds for doubting the tenability of global common descent.

Organisms are complex self-replicating machines consisting of many interrelated subsystems. As machines, biosystems function within certain ranges of their many parameters. For most complex machines in practice, the parameter values that work lie in relatively small, isolated regions of configuration space. So, even allowing for the high adaptability of living systems, significant functional changes leading to substantial phenotypic differences in practice require a deep, synchronised reorganisation of all the relevant subsystems. Gradualist models similar to [Dawkins 1996], which use only random and law-like causal factors (i.e. random changes in genotype coupled with unguided selection), cannot satisfactorily account for such complex synchronised changes in the general case, given the terrestrial probabilistic bounds on physico-chemical interactions. Consequently, the problem of functional islands versus a functional continent still needs to be addressed.
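The synchronisation point can be given a rough number with a deliberately crude model, which is our assumption and not one taken from the cited sources: a new function needs `k` specific point mutations, each arising independently at rate `mu` per site per generation, and no intermediate step is selected for. The chance that all `k` appear together in one genome is then `mu**k`, so the expected wait grows geometrically with `k`. The model leaves out recombination, drift, selectable or neutral intermediates and alternative genetic routes to the same function, all of which a fuller treatment would have to include. The function names and parameter values are hypothetical.

```python
def co_occurrence_probability(mu: float, k: int) -> float:
    """Probability that k specific, independent point mutations arise in one genome at once."""
    return mu ** k


def expected_wait_generations(mu: float, k: int, population: int) -> float:
    """Approximate generations until one individual carries all k mutations together.

    Treats every birth as an independent trial with success probability mu**k;
    the mean wait 1 / (population * mu**k) is accurate when that product is small.
    """
    return 1.0 / (population * co_occurrence_probability(mu, k))


# Hypothetical parameters: mutation rate 1e-8 per site per generation, 1e9 individuals.
for k in (1, 2, 3):
    print(k, f"{expected_wait_generations(1e-8, k, 10**9):.1e}")
```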

The main claim of the empirical theory of design inference is that, in practice, a sufficiently large quantity of functionally specified information (FSI, [Abel 2011]) cannot plausibly emerge spontaneously or through gradualistic processes, and instead points statistically and strongly to choice-contingent causality (design). Substantially different morphologies (body plans) are explained by substantial differences in the associated FSI.
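FSI can be quantified in more than one way. One established approach, shown here as an assumption rather than as Abel's own definition, is to align sequences that all perform the same function and add up, position by position, how far each column's Shannon entropy falls below that of a uniform 20-letter amino-acid alphabet. The names and the four-sequence alignment below are hypothetical; meaningful estimates require large, carefully curated alignments.

```python
import math
from collections import Counter


def column_entropy(column: str) -> float:
    """Shannon entropy, in bits, of the residues observed in one alignment column."""
    total = len(column)
    return -sum((n / total) * math.log2(n / total) for n in Counter(column).values())


def functional_bits(alignment: list[str], alphabet_size: int = 20) -> float:
    """Sum over columns of log2(alphabet_size) minus the column's observed entropy.

    Assumes the sequences are already aligned, have equal length and all
    perform the same function.
    """
    length = len(alignment[0])
    if any(len(seq) != length for seq in alignment):
        raise ValueError("sequences must be aligned to equal length")
    ground = math.log2(alphabet_size)
    return sum(
        ground - column_entropy("".join(seq[i] for seq in alignment))
        for i in range(length)
    )


# Hypothetical toy alignment of four short peptides with the same function.
toy = ["MKVL", "MKIL", "MRVL", "MKVL"]
print(f"{functional_bits(toy):.2f} bits")
```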

It is true that, in principle, some uncertainty reduction can be gained automatically as a result of information transfer over a Shannon channel. This can happen, for example, through the automatic correction of random errors such as we see in biosystems, but it always presupposes an initial, sufficiently long meaningful message, which statistically rules out combinations of chance and law-like necessity as its source.
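The error-correction point can be illustrated with the textbook binary symmetric channel, which flips each transmitted bit with probability `p`. The sketch below (hypothetical names; our illustration rather than a model of any biological mechanism) computes the channel capacity `1 - H(p)` and decodes a threefold repetition code by majority vote. The decoder restores a message that was already supplied at the input; it does not originate one.

```python
import math


def binary_entropy(p: float) -> float:
    """H(p) in bits for a binary source emitting 1 with probability p."""
    if p in (0.0, 1.0):
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)


def bsc_capacity(p: float) -> float:
    """Capacity, in bits per use, of a binary symmetric channel with flip probability p."""
    return 1.0 - binary_entropy(p)


def encode_repetition(bits: list[int], n: int = 3) -> list[int]:
    """Send every bit n times."""
    return [b for b in bits for _ in range(n)]


def decode_repetition(received: list[int], n: int = 3) -> list[int]:
    """Majority vote over each block of n received bits (n should be odd)."""
    return [int(sum(received[i:i + n]) > n // 2) for i in range(0, len(received), n)]


message = [1, 0, 1, 1]            # the message has to be supplied up front
received = encode_repetition(message)
received[1] ^= 1                  # channel noise: one flip in the first block
received[5] ^= 1                  # and one flip in the second block
print(decode_repetition(received) == message)
print(f"{bsc_capacity(0.11):.3f}")
```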

Nevertheless, information transfer results in more than uncertainty reduction. The problem is that information always carries a semantic cargo in a given context, and Shannon's theory does not address this. Indeed, Shannon himself did not want his theory to be labelled a theory of information for this reason [ibid].
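That Shannon's measure is indifferent to meaning is easy to demonstrate. The sketch below (our illustration; the sentence and function names are arbitrary) computes a first-order entropy estimate, based only on single-character frequencies, for a sentence and for a random shuffle of its letters. The two values are identical. Higher-order estimates that take character context into account would separate the two strings statistically, but they would still do so without reference to what the sentence means.

```python
import math
import random
from collections import Counter


def first_order_entropy(text: str) -> float:
    """Per-character Shannon entropy, in bits, from single-character frequencies.

    Counts are sorted so that the floating-point sum does not depend on the
    order in which characters first appear.
    """
    n = len(text)
    counts = sorted(Counter(text).values())
    return -sum((c / n) * math.log2(c / n) for c in counts)


sentence = "information always carries a semantic cargo"
letters = list(sentence)
random.Random(0).shuffle(letters)
scrambled = "".join(letters)

# Same characters with the same frequencies, hence the same entropy,
# although only the first string means anything to a reader.
print(first_order_entropy(sentence) == first_order_entropy(scrambled))
```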

The principal problem of the theory of evolution is the complete absence of empirical evidence for the spontaneous (i.e. chance-contingent and/or necessary) generation of semantics in nature. In practice, we do not see a sufficiently long meaningful message generated automatically without recourse to intelligence. Nor do we ever see a spontaneous drastic change of semantics starting from a meaningful initial message. A drastic change of the rules governing a system's behaviour is only possible given specialised initial conditions (intelligent actors and meta-rules prescribing such a change). How, then, could such a change come about through unguided variation coupled with law-like necessity?

What we do see is small adaptive variation of the already available genetic instructions, within what the existing protocols of biosystems allow. Incidentally, the known genetic algorithms are no refutation of our thesis, simply because any such algorithm is (or can be cast as) a formal procedure in which a function is optimised in a given context. Consequently, any genetic algorithm guides the system towards a goal state and is therefore teleological. Contemporary science knows of no way for formalism to emerge spontaneously out of chaos.
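To make concrete what is meant by a function optimised towards a goal, here is a minimal mutation-plus-selection loop in the style of Dawkins's well-known "weasel" string demonstration. It is our reconstruction with hypothetical names and arbitrary parameters (100 offspring per generation, a per-character mutation rate of `1/len(TARGET)`), not Dawkins's original code. Note where the goal lives: `TARGET` and the `fitness` function that scores closeness to it are supplied by the programmer, and selection makes progress only because of them.

```python
import random
import string

ALPHABET = string.ascii_uppercase + " "
TARGET = "METHINKS IT IS LIKE A WEASEL"  # the goal state is written into the program


def fitness(candidate: str) -> int:
    """Objective function: number of positions that already match TARGET."""
    return sum(a == b for a, b in zip(candidate, TARGET))


def mutate(parent: str, rate: float, rng: random.Random) -> str:
    """Copy parent, replacing each character by a random one with probability rate."""
    return "".join(rng.choice(ALPHABET) if rng.random() < rate else ch for ch in parent)


def evolve(offspring: int = 100, max_generations: int = 20_000, seed: int = 1) -> tuple[str, int]:
    """Mutation plus selection until TARGET is reached or the generation limit runs out."""
    rng = random.Random(seed)
    parent = "".join(rng.choice(ALPHABET) for _ in TARGET)
    rate = 1 / len(TARGET)
    for generation in range(max_generations):
        if parent == TARGET:
            return parent, generation
        best = max((mutate(parent, rate, rng) for _ in range(offspring)), key=fitness)
        # Elitist step: a child replaces its parent only if it scores at least as well.
        if fitness(best) >= fitness(parent):
            parent = best
    return parent, max_generations


result, generations = evolve()
print(result, generations)
```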

We believe that it is the semantic load that determines the hypothetical passage of a biosystem between functional islands in configuration space. If this is true, then each such semantic transfer requires purposive intelligent intervention. Interestingly, Darwin himself spoke of the descent of all biological forms from one or several common ancestors [Darwin 1859]. If we interpret his words in the sense of a phylogenetic forest (or, more precisely, taking lateral genetic transfer into account, a set of connected graphs with a low proportion of cycles), we can agree with Darwin on condition that each phylogenetic tree corresponds to a separate, unique body plan (Figure 1).
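The forest picture in this paragraph can be stated in graph-theoretic terms. In the sketch below, which uses hypothetical node labels and treats edges as undirected (our simplification, since real phylogenies are directed), each connected component is one separate tree of the forest, which on the view above corresponds to one body plan. Lateral transfers appear as extra edges that close cycles, counted with the standard cyclomatic number E - V + C.

```python
def forest_summary(nodes: list[str], edges: list[tuple[str, str]]) -> tuple[int, int]:
    """Return (connected components, independent cycles) of an undirected graph.

    Components are found with union-find; the number of independent cycles
    is the cyclomatic number: edges - nodes + components.
    """
    parent = {node: node for node in nodes}

    def find(x: str) -> str:
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving keeps the trees shallow
            x = parent[x]
        return x

    for a, b in edges:
        root_a, root_b = find(a), find(b)
        if root_a != root_b:
            parent[root_a] = root_b
    components = len({find(node) for node in nodes})
    return components, len(edges) - len(nodes) + components


# Hypothetical forest: two separate trees rooted at "A" and "B".
nodes = ["A", "A1", "A2", "B", "B1", "B2"]
descent = [("A", "A1"), ("A", "A2"), ("B", "B1"), ("B", "B2")]
lateral = [("A1", "A2")]  # a lateral transfer within tree A closes one cycle
print(forest_summary(nodes, descent))
print(forest_summary(nodes, descent + lateral))
```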

Figure 1. A comparative analysis of two hypotheses: common descent vs. common design/implementation. Note that common design/implementation does not exclude local common descent.

Data available today corresponds much better to the hypothesis of common design/implementation than to the hypothesis of global common descent.

- Firstly, what was thought to be genetic "junk" (i.e. non-protein-coding DNA, which makes up the majority of the genome) and was seen as evidence of unguided evolution at work is, at least in part, not junk at all but carries regulatory functions in the processing of genetic information [Wells 2011].

- Secondly, the universal homology of genomes can now be interpreted as a consequence not only of common ancestry but also of common design/implementation and code reuse in different contexts, in much the same way as we copy and paste or cut and paste pieces of text. Probably the best analogy here is the process of software creation, in which novelty does not arise from random per-symbol variations that are then fixed by selection based on "whether it works": the vast majority of such mutations would be deleterious to the functioning of the code as a single integrated whole. Rather, novelty in software comes about through well-thought-out, discrete modifications made one functional unit at a time.

- Thirdly, common design is indirectly supported by the sheer absence of examples of spontaneous generation of formalism, functionality, semantics or control (i.e. cybernetic characteristics of biosystems) in non-living nature.
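The software analogy in the second point can be tried out directly. The sketch below is our own toy, not a measurement from the cited sources: it applies random single-character substitutions to a one-line Python function and counts how many mutants still compile and return the original values on a few test inputs. The function, the symbol set and the inputs are arbitrary assumptions, so the resulting fraction illustrates the per-symbol point without saying anything quantitative about genomes.

```python
import random

SOURCE = "def f(x):\n    return x * x + 1\n"
TEST_INPUTS = range(-5, 6)
EXPECTED = [x * x + 1 for x in TEST_INPUTS]
SYMBOLS = "abcdefghijklmnopqrstuvwxyz0123456789+-*/() "


def behaves_like_original(src: str) -> bool:
    """True if src still compiles, defines f and reproduces the original outputs."""
    namespace: dict = {}
    try:
        # Empty builtins: mutants can only use what the source itself defines.
        exec(compile(src, "<mutant>", "exec"), {"__builtins__": {}}, namespace)
        return [namespace["f"](x) for x in TEST_INPUTS] == EXPECTED
    except Exception:
        return False


def point_mutant(src: str, rng: random.Random) -> str:
    """Replace one randomly chosen character with a random symbol."""
    i = rng.randrange(len(src))
    return src[:i] + rng.choice(SYMBOLS) + src[i + 1:]


def surviving_fraction(trials: int = 2000, seed: int = 0) -> float:
    """Share of random single-character mutants that still behave like the original."""
    rng = random.Random(seed)
    survivors = sum(behaves_like_original(point_mutant(SOURCE, rng)) for _ in range(trials))
    return survivors / trials


print(f"{surviving_fraction():.3f}")
```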

Finally, as regards Occam's rule, we need to say the following. According to Einstein's apt phrase, everything should be made as simple as possible, but not simpler. A crude oversimplification that ignores crucial aspects of the matter cannot even be considered adequate, let alone more parsimonious than more complex and detailed models. Various theories of (unguided) evolution overlook the fact that microadaptations cannot be extrapolated to explain differences at the level of body plans. This is because successfully modifying morphology requires a deep, synchronised reorganisation of a whole range of interdependent functional subsystems of a living organism, and here gradualism does not withstand the statistical and information-theoretic challenge. The complexity of such reorganisations grows with the complexity of biological organisation, while the capabilities of functional preadaptations are limited in practice.
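One standard way to formalise "as simple as possible, but not simpler" in model comparison, offered here as our illustration rather than as part of the original argument, is the Akaike information criterion. It penalises each free parameter $k$ but also penalises poor fit through the maximised likelihood $\hat{L}$, and the model with the lower value is preferred:

$$\mathrm{AIC} = 2k - 2\ln \hat{L}$$

A model with fewer parameters therefore wins only if it does not give up too much fit: an extra parameter pays for itself when it raises $\ln \hat{L}$ by more than 1.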

That said, even if we leave aside the complexity and synchronisation challenge described above, the principal reason for the untenability of macroevolution (i.e. of theories that treat evolution as the exclusive source of genuine novelty) is the statistical implausibility of emergence hypotheses that assume a gradual "crystallisation" of cybernetic control, semantics and formalism in biosystems [Abel 2011].

Sources

- David L. Abel (2009), The Universal Plausibility Metric (UPM) & Principle (UPP). Theoretical Biology and Medical Modelling, 6:27.

- David L. Abel (2011), The First Gene.

- Charles Darwin (1859), On the Origin of Species.

- Jonathan Wells (2011), The Myth of Junk DNA.

- Blog and forum UncommonDescent.com